Translated from “Copilot kan påvirke utøvelse av offentlig myndighet”, Finding #2. This one is a really interesting finding and I am thankful the authors brought it up. My observation after more than a decade here as an immigrant is that trust in the government is quite high and it is warranted. Transparency and fairness are core to a healthy society. I would not characterize Copilot for Microsoft 365 as transparent.

Product Transparency

On the transparency side, Microsoft has done a relatively poor job of exposing technical indicators of Copilot health. As admins, we’re sorely lacking information on the health of indexing and other related Copilot services in a tenant. Anecdotally, I have some tenants where the index is very clearly returning the wrong responses to queries, even though a simple SharePoint search finds the correct information. The responses are so wrong that they point to a clear defect in the service’s functionality. We need something to indicate health: a richness score or some other numerical indicator of indexing progress, depth, general metrics, etc.

Adding to the complexity is that responses change over time and from user to user. The NTNU report points out that an auditable historical basis for answers given by Copilot for M365 would be necessary should it be used as a basis for a decision. That’s going to be very hard when a user gets a different response from a Copilot for Microsoft 365 summary generated in-line in Word vs. one generated in the ribbon-invoked chat window. These are necessarily different; the Copilot for M365 chat window maintains a context over the session lifetime, and this will influence the response. With both a tenant-level index and a user-level index, we see further possibility of drift from standard answers. This is, of course, part of the point of Copilot: customization with ‘your’ data (whoever ‘your’ is in this context: kommune, saksbehandler, new employee).

Given these attributes, implementing Copilot for M365 in the public sector will require use cases that are clearly defined and tested for efficacy. Copilot for M365 is not proposed as a replacement for saksbehandlers/caseworkers, for example, but rather as a tool to augment the daily productivity of workers.

As with much of this report, we see a blurring between “Copilot” (often unspecified, but assumed to mean Copilot for Microsoft 365) and AI in general. Some of the assumptions made about Copilot for M365 have strayed very far from technical correctness. That said, we need to talk about this important topic. We need to talk about what we do and do not use copilots (lowercase) for.

We can support safe adoption by making the shortcomings clear, by defining known-safe use cases for situations where public good or personal safety is at risk in conjunction with an employee’s job, and by thinking before we implement.

Previous Lessons Learned – We’ve done this before!

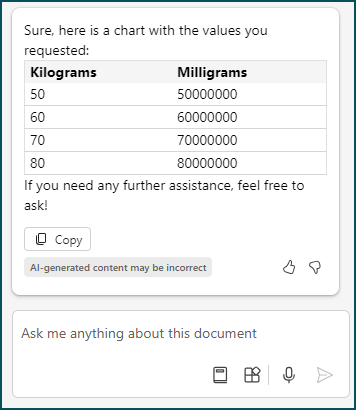

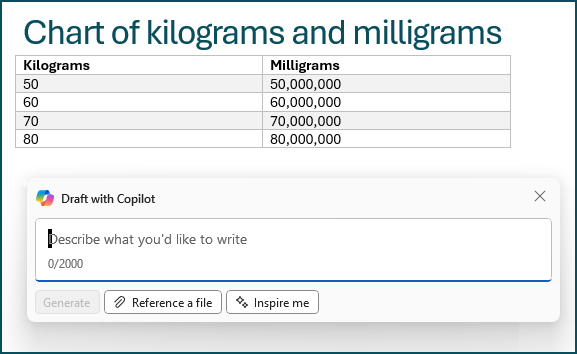

I could probably stop here because the above paragraph is good advice. I want to go on and think about what we have augmented with tech tools in the past. Let’s imagine the first user of Excel in a medical department (I know it wasn’t the first such software, but bear with me; if I started by mentioning Lotus 1-2-3 I’d lose more than half of you). We need a chart for dosing Heparin, which is done by weight, 1 mg per kg. Weight + Calculation = Dosing. A quick formula and you have the output. Grab the fill handle, drag, and you have a chart. To a user, what exactly is the difference between creating this in Excel and asking for the same to be created by Copilot for M365? Is there a difference to the average user?
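To make the deterministic side of that comparison concrete, the spreadsheet approach amounts to applying one fixed formula to every row. Here is a minimal Python sketch of the same idea, assuming the 1 mg per kg figure from the example above. The function names and the weight range are hypothetical, and the numbers are illustrative only, not clinical guidance.

```python
from decimal import Decimal

# Illustrative rate taken from the example in the text; NOT clinical guidance.
DOSE_MG_PER_KG = Decimal("1")


def dose_mg(weight_kg, rate=DOSE_MG_PER_KG):
    """Return the dose in mg for one weight, using the same formula every time."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return Decimal(weight_kg) * rate


def dosing_chart(start_kg, stop_kg, step_kg):
    """Build (weight, dose) rows, much like dragging the fill handle in Excel."""
    return [(w, dose_mg(w)) for w in range(start_kg, stop_kg + 1, step_kg)]


chart = dosing_chart(50, 100, 10)
for weight, dose in chart:
    print(f"{weight:>4} kg  {dose:>6} mg")
```

Run it twice and you get the same chart twice. That repeatability is what a formula gives you and what a generated answer does not promise.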

Copilot for M365 is not accomplishing this task in the same way as Excel, but can we expect users to know this? Do we remember a time when we had to explain that one could control the number of decimal places shown by clicking a button or formatting a column? We expect users to know that today.

As for the aforementioned dosing chart, I could not, in a few minutes of testing, cause it to produce a chart with wildly incorrect information. Still, from what we know of how this and other AI products work (and how neural networks & LLMs function in general), we cannot rely on it for more complex maths. Both charts, one from the in-line Draft with Copilot and one from the ribbon-invoked chat window, were correct. The in-line one, however, added commas to separate every three digits. In Norway, a comma is the decimal separator. A human would normally apply a reasonableness check and recognize that in this context the commas can be ignored. Where human safety is concerned, we cannot count on a person always making that check.
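The comma problem is easy to demonstrate. The sketch below is a hypothetical helper, not anything Copilot or Excel does internally: it parses the same string under two separator conventions. It assumes the English convention of a comma for grouping and a point for decimals, and the Norwegian convention of a space for grouping and a comma for decimals. The value "1,500" is made up for illustration.

```python
def parse_number(text, decimal_sep, group_sep):
    """Parse a numeric string under an explicitly stated separator convention."""
    # Drop grouping separators first, then normalise the decimal separator.
    cleaned = text.replace(group_sep, "").replace(decimal_sep, ".")
    return float(cleaned)


value = "1,500"  # hypothetical chart cell

# English convention: the comma groups thousands.
as_english = parse_number(value, decimal_sep=".", group_sep=",")

# Norwegian convention: the comma is the decimal separator.
as_norwegian = parse_number(value, decimal_sep=",", group_sep=" ")

print(as_english, as_norwegian)
```

The same characters come out a factor of one thousand apart depending on which convention the reader assumes, which is exactly the kind of gap a reasonableness check is supposed to catch.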

Successful Implementations Take Careful Consideration

Instead of high-risk tasks, we can use tools to help format and to generate basic drafts. We can ask Copilot for M365 to summarize any emails we have sent to ensure follow-up was completed (Outlook, M365 chat). We can ask others to evaluate us (Forms). We can use Copilot in meetings to make sure we covered all the points on the agenda (Teams). We can use Copilot to transcribe to ensure understanding (Teams, Summarize meetings). We can generate empty tables (Word, OneNote) and draft basic ideas for improving our department (Whiteboard). We can handle our spreadsheets better by asking for coaching on concatenating or splitting columns (Excel), and we can find points of interest in data that we may not have had the time to look for previously (Excel, Copilot). All of the above tasks would free up time for an expert to apply that expertise to higher-risk or higher-impact tasks. After all, isn’t this the point of productivity tools?

The takeaway from this one is that we need to take the time to do the analyst work. We need to be diligent in establishing and knowing our safe use cases, understand the risk associated with a given user’s position, and test our riskier use cases with expert workers.
